The LA Times recently ran an article about Elon Musk that fell below normal editorial standards. The article by Russ Mitchell, titled “Twitter bots helped build the cult of Elon Musk and Tesla. But who’s creating them?”, is based solely on “preliminary research” by David A. Kirsch. This article is adapted from the video below.

Kirsch is an Associate Professor of Management and Entrepreneurship at the University of Maryland. He has a Ph.D. in history and has published a fair number of academic papers, but none of those papers are about social media bots. The paper the LA Times wrote about doesn’t seem to exist, at least in the ways that count.

Bad Preprint Papers Shouldn’t Get Media Coverage

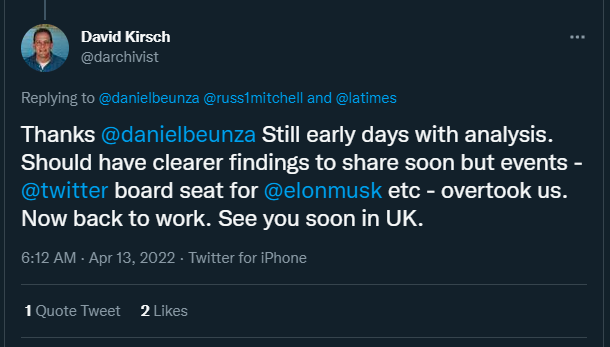

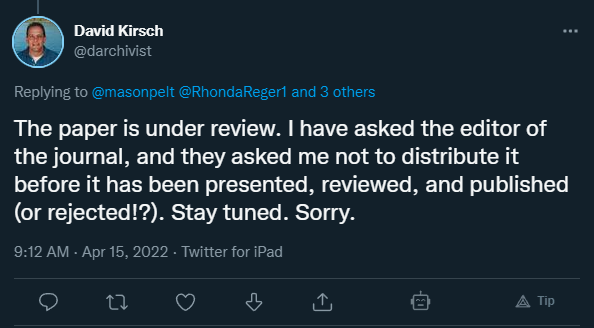

The paper is not yet peer-reviewed and is not publicly available as a preprint. At the time of recording, some of Professor Kirsch’s Tweets led me to believe the paper wasn’t something that could be shared, as it still seemed to be in development.

After recording, Kirsch made other statements on Twitter about the paper’s status that were, to my mind, incompatible. One Tweet says the work is “[s]till in the early days with analysis”; another said, “The paper is under review,” and that the unnamed journal’s editor had asked him not to distribute it. It seems strange that Kirsch was able to speak about the paper to the LA Times yet is prohibited from answering basic questions about his bot detection methodology.

Others and I have serious doubts about the validity of Kirsch’s data. Both the LA Times article and a Twitter thread by Kirsch indicate that the tool Botometer was used for bot detection. While I use Botometer myself, it is a fundamentally unreliable tool and is certainly not equipped to be the arbiter of humanity for Kirsch’s use case.

Botometer Is Flawed

The Botometer tool is well documented as unreliable. A preprint (you can criticize the fact that it’s a preprint) on the SSRN server by Florian Gallwitz and Michael Kreil attempted to validate the “bot” accounts identified in peer-reviewed studies. The authors reported that they “were unable to find a single ‘social bot’. Instead, we found mostly accounts undoubtedly operated by human users.”

A peer-reviewed study titled “The false positive problem of automatic bot detection in social science research,” published in the open access journal PLOS ONE, found a high number of both false positives and false negatives when Botometer was used to classify accounts. Another peer-reviewed study, “Bot, or not? Comparing three methods for detecting social bots in five political discourses,” published in Big Data & Society, also noted serious limitations in Botometer’s reliability.

Misusing A Tool

For some purposes, like estimating the number of bots within a large enough data set, you can argue that the false positives and false negatives cancel each other out. Indeed, as a general barometer, Botometer works well. We use it for limited purposes at Push ROI, and I’ve used it for some of my writing.

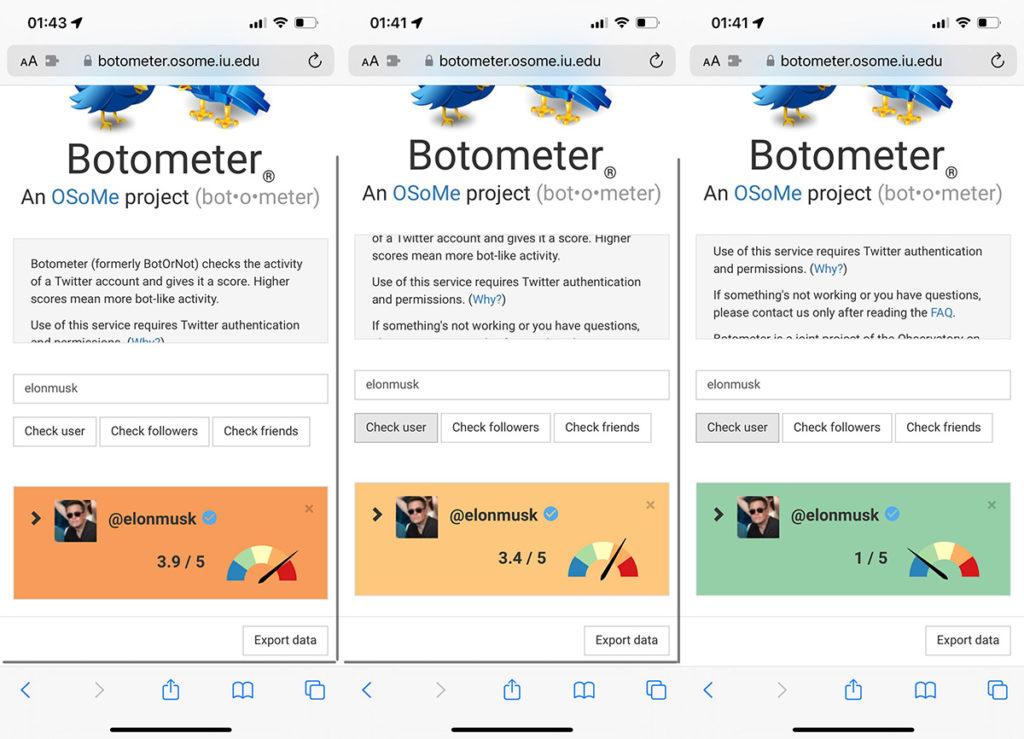

Botometer is not a bad tool; it is a flawed tool. Few things make that point as well as Florian Gallwitz’s Tweet showing a series of screenshots of Botometer’s evaluations of Elon Musk taken only a few minutes apart. The bot rating of Musk’s Twitter account changed dramatically over that short period.

https://twitter.com/FlorianGallwitz/status/1514028268361592839

So the tool has real flaws. Yet an LA Times article is now out in the world built on what I would term journalistic speculation, using phrases like “according to,” “based on,” and “there is reason to believe,” all of which hang on that single unreliable tool. At least this isn’t an article about cancer based on “preliminary research,” where the author refuses to share his findings and a large number of oncologists question the stated methodology.

Elon Musk Has A Weird Nerd Army

Back to the paper: let’s examine Elon Musk’s relationship with his fans. I can think of no better description than the meme of a Simpsons character jumping in front of a bullet. Weird nerds protecting Elon Musk from valid criticism.

With that fan relationship in mind, and considering the “bot” behavior Kirsch described to the LA Times and Botometer’s limited reliability, I don’t think a botnet is likely to account for most of what the “paper” uncovered. I think it’s more likely that weird nerds triggered false positives on a flawed bot detection tool.

Think about it: many weird nerds aren’t just using their main accounts but are also logging into backup and burner accounts. It’s not particularly uncommon to operate more than one account. Perhaps someone wants to avoid pulling a Ted Cruz and liking an adult video on main, or they like to troll.

I’m not saying no bot accounts pushed Tesla. It’s Twitter; I’m sure bots said many things. But the speculation that Elon Musk or his agents used botnets to push up Tesla stock or build his cult of personality is not well supported. That claim rests on research that isn’t peer-reviewed, isn’t published as a preprint (so others can’t analyze it), uses a flawed tool for bot identification, and was conducted by someone whose research area is not social bots.

Mason Pelt is the founder of Push ROI. First published on PushROI.com on April 15, 2022. Photo “robots” by jmorgan is marked with CC BY-SA 2.0.